Harnessing the Power of GPT Functions in Convoworks

Artificial intelligence keeps advancing quickly, with OpenAI leading the charge. Their function calling feature gives developers a more structured way to connect chat models to their own code. Today, we’re excited to unveil the Convoworks GPT Package v.0.5.0, which incorporates this functionality.

A Deep Dive into OpenAI’s GPT Function Calling

OpenAI’s breakthroughs in function calling herald a new age for conversational AI. With chat-based models like gpt-3.5-turbo and gpt-4, developers can achieve more than just generating conversational replies. But what does this entail?

The Essence of Function Calling in GPT

Function calling empowers GPT models to identify and act upon specific user requests, producing a structured description for the intended action. For example, when a user inquires about the weather, instead of merely returning a conversational reply, the model can produce a JSON structure that prompts a ‘get_weather’ function call.
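To make the weather example concrete, here is a minimal Python sketch of what a function description and the resulting structured reply can look like. The get_weather schema, its location parameter, and the sample message are illustrative assumptions, not something taken from Convoworks or returned by a real API call; the message shape follows the function_call format of the chat completions API.

```python
import json

# Hypothetical function description in the JSON Schema style used for
# function definitions. The "location" parameter is an assumption.
GET_WEATHER = {
    "name": "get_weather",
    "description": "Get the current weather for a location.",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {"type": "string", "description": "City name, e.g. Paris"},
        },
        "required": ["location"],
    },
}

# Illustrative assistant message that requests a function call instead of
# answering in prose. Note that "arguments" is a JSON-encoded string.
assistant_message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "get_weather",
        "arguments": "{\"location\": \"Paris\"}",
    },
}

call = assistant_message["function_call"]
arguments = json.loads(call["arguments"])  # decode the string into a dict
print(call["name"], arguments)
```

The key point is that the model only describes the call. Running get_weather is still the job of the application.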

This approach’s ingenuity stems from its adaptability. Developers can:

- Integrate External APIs: Craft chatbots that connect with external APIs, ranging from weather and news to e-commerce platforms.

- Integrate with their own systems: Encapsulate access to system-specific data and logic into custom functions that GPT can invoke and utilize.

- Translate Natural Language to API Calls: Enable GPT models to convert general user queries into precise API requests, bridging the divide between natural human language and machine instructions.

Function Calling Workflow

- The application sends the user’s query to the model together with the definitions of the available functions.

- Based on the input, the model might opt to invoke a function, replying with a JSON string detailing the function and its parameters.

- Developers then parse this string into a JSON object to execute the intended function.

- The function’s result can be relayed back to the GPT model for an optimized and user-friendly summary or answer.
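The steps above can be sketched in Python roughly as follows. This is a simplified illustration, not the Convoworks implementation: get_weather, the FUNCTIONS registry, and handle_assistant_message are hypothetical names, and the model reply is passed in as a plain dict rather than fetched from the API.

```python
import json
from typing import Optional


def get_weather(location: str) -> dict:
    """Hypothetical stand-in for a real weather lookup."""
    return {"location": location, "forecast": "sunny", "temperature_c": 21}


# Registry mapping function names the model may request to Python callables.
FUNCTIONS = {"get_weather": get_weather}


def handle_assistant_message(message: dict) -> Optional[dict]:
    """Execute a requested function and build the follow-up message.

    Returns None when the model answered in plain text.
    """
    call = message.get("function_call")
    if call is None:
        return None

    func = FUNCTIONS.get(call["name"])
    if func is None:
        result = {"error": "unknown function: " + call["name"]}
    else:
        try:
            # The arguments arrive as a JSON string and may be malformed.
            args = json.loads(call["arguments"])
            result = func(**args)
        except (json.JSONDecodeError, TypeError) as exc:
            result = {"error": str(exc)}

    # A "function" role message carries the result back to the model,
    # which can then phrase the final answer for the user.
    return {"role": "function", "name": call["name"], "content": json.dumps(result)}
```

Returning errors as function results, instead of raising, lets the model see what went wrong and either retry or explain the problem to the user.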

Flexibility in Function Calls

GPT models aren’t confined to singular function calls. They can opt for sequential or combined function calls before generating a user-friendly response. Although the model usually determines the optimal route autonomously, developers can guide its behavior by specifying particular functions or user prompts.
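As a rough illustration of how a developer can steer this choice, the sketch below builds a chat completions request body with the function_call option, which accepts "auto", "none", or a specific function name. The build_request helper is a hypothetical convenience, not part of any SDK, and newer versions of the OpenAI API express the same idea through the tools and tool_choice parameters.

```python
def build_request(messages: list, functions: list, choice: str = "auto",
                  model: str = "gpt-3.5-turbo") -> dict:
    """Build a request body, optionally forcing or disabling function calls.

    choice: "auto" (model decides), "none" (never call a function),
    or the name of one of the supplied functions (always call it).
    """
    names = {f["name"] for f in functions}
    if choice in ("auto", "none"):
        function_call = choice
    elif choice in names:
        function_call = {"name": choice}
    else:
        raise ValueError("unknown function: " + choice)

    return {
        "model": model,
        "messages": messages,
        "functions": functions,
        "function_call": function_call,
    }


# Example: force the model to call a (hypothetical) get_weather function.
request = build_request(
    messages=[{"role": "user", "content": "Do I need an umbrella in Paris?"}],
    functions=[{"name": "get_weather", "parameters": {"type": "object", "properties": {}}}],
    choice="get_weather",
)
print(request["function_call"])
```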

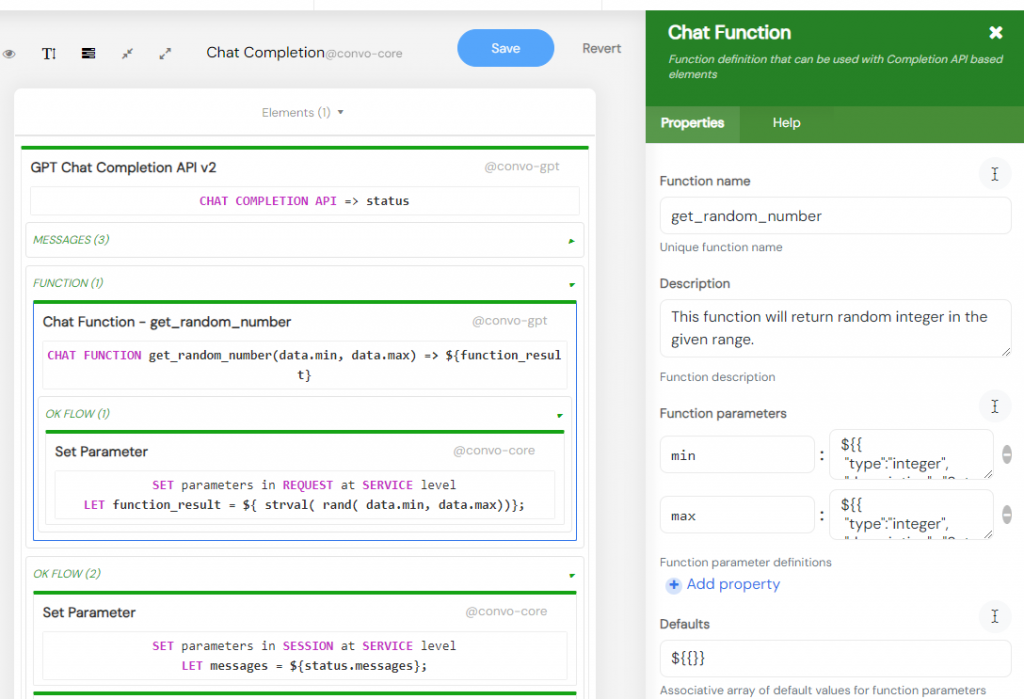

Harnessing OpenAI Functions in Convoworks

So, how does the Convoworks GPT Package tap into this potential? It’s straightforward. Each GPT function is defined as an element, so you can register several functions at once. When the GPT model requests a function call based on user input, the corresponding function component is activated and runs its subflow components (or elements), which execute the specified logic. These subflow components have direct access to the function arguments, carry out the necessary operations, and set the function’s return value. Keep in mind that function outputs count toward API token limits, so keep them concise to avoid excessive token usage.

In this example, we utilize the PHP functions strval() and rand() through the Expression Language. See which other PHP functions are supported in our Core Package documentation. For available WordPress functions, refer to the WP Core Package documentation.
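For readers who do not work in PHP, a rough Python equivalent of that strval() and rand() combination might look like the sketch below. It is an illustration only: in Convoworks the logic is configured through components and the Expression Language, and the schema shown for get_random_number is an assumption based on its name and its min and max arguments.

```python
import random

# Assumed function description; only the name and the min/max parameters
# come from the template, the rest is illustrative.
GET_RANDOM_NUMBER = {
    "name": "get_random_number",
    "description": "Generate a random integer between min and max, inclusive.",
    "parameters": {
        "type": "object",
        "properties": {
            "min": {"type": "integer"},
            "max": {"type": "integer"},
        },
        "required": ["min", "max"],
    },
}


def get_random_number(minimum: int, maximum: int) -> str:
    """Mirror PHP's strval(rand(min, max)): both bounds are inclusive."""
    return str(random.randint(minimum, maximum))


# Arguments as they would arrive after decoding the model's JSON string.
args = {"min": 1, "max": 6}
value = get_random_number(args["min"], args["max"])
print(value)
```

Returning a short string keeps the function result small, which matters because the result is sent back to the model and consumes tokens.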

GPT Example Chat – Template Service

The GPT package comes pre-loaded with a template service, providing an ideal foundation for your projects. This service encapsulates fundamental principles necessary to kickstart your smart chatbot journey:

- Function calling: It encompasses a single custom function, get_random_number(min, max), designed for random number generation.

- Dynamic system context: It showcases how system messages (main prompts, additional contexts) can be segmented and dynamically crafted or omitted as needed.

- Limiting the conversation size: Given the inherent context window constraints of LLMs, our mechanism aggregates the oldest messages into a concise summary, allowing extended interactions without losing critical information.

- Message moderation: Prior to forwarding messages to GPT, they undergo checks for content violations like hate speech, ensuring compliance with OpenAI’s terms of service.
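The conversation size limit can be pictured with a small Python sketch. This is not the template’s actual implementation: limit_conversation and its summarize callback are hypothetical, and in a real service the summary would most likely come from another model call rather than the naive stand-in used here.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def limit_conversation(messages: List[Message], max_messages: int,
                       summarize: Callable[[List[Message]], str]) -> List[Message]:
    """Fold the oldest messages into one summary once the limit is exceeded."""
    if max_messages < 2:
        raise ValueError("max_messages must be at least 2")
    if len(messages) <= max_messages:
        return list(messages)

    keep = max_messages - 1  # reserve one slot for the summary message
    old, recent = messages[:-keep], messages[-keep:]
    summary = {"role": "system",
               "content": "Summary of earlier conversation: " + summarize(old)}
    return [summary] + recent


def naive_summary(old: List[Message]) -> str:
    """Stand-in summarizer that simply joins the old message texts."""
    return " / ".join(m["content"] for m in old)


history = [{"role": "user", "content": "message %d" % i} for i in range(6)]
trimmed = limit_conversation(history, 4, naive_summary)
print(len(trimmed), trimmed[0]["content"])
```

Counting messages is the simplest possible budget. A production version would more plausibly count tokens, since that is what the context window actually limits.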

Check out the video below to see how you can try the GPT Example Chat for yourself.

Notices for Trying Out the GPT Example Chat:

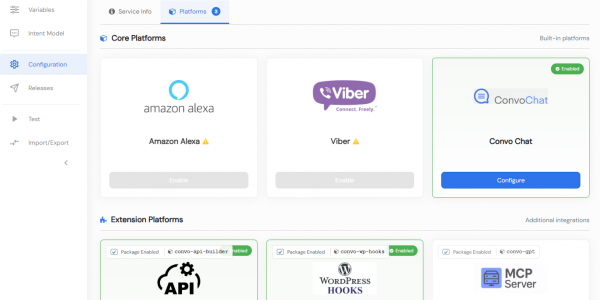

- In the Variables view, enter your OpenAI API key for the GPT_API_KEY.

- If you want to enable it on the front page, go to the Configuration view and enable the Convo Chat platform.

- The shortcode for invoking the chat is: [convo_chat service_id="your-service-id" title="Your Title"].

What More Can You Anticipate?

While the “GPT Example Chat” template elucidates core principles and offers an excellent project blueprint, we will be rolling out several other use cases in the coming period:

- Appointment scheduling and newsletter subscription functionalities.

- A Q&A bot proficient in semantic website searches.

- A WordPress admin bot geared towards aiding your website maintenance endeavors.

Conclusion

The Convoworks GPT Plugin v.0.5.0 isn’t merely an upgrade; it’s a significant step forward. Merging OpenAI’s function calling with Convoworks’ robust architecture paves the way for crafting richer chatbot experiences on WordPress.
